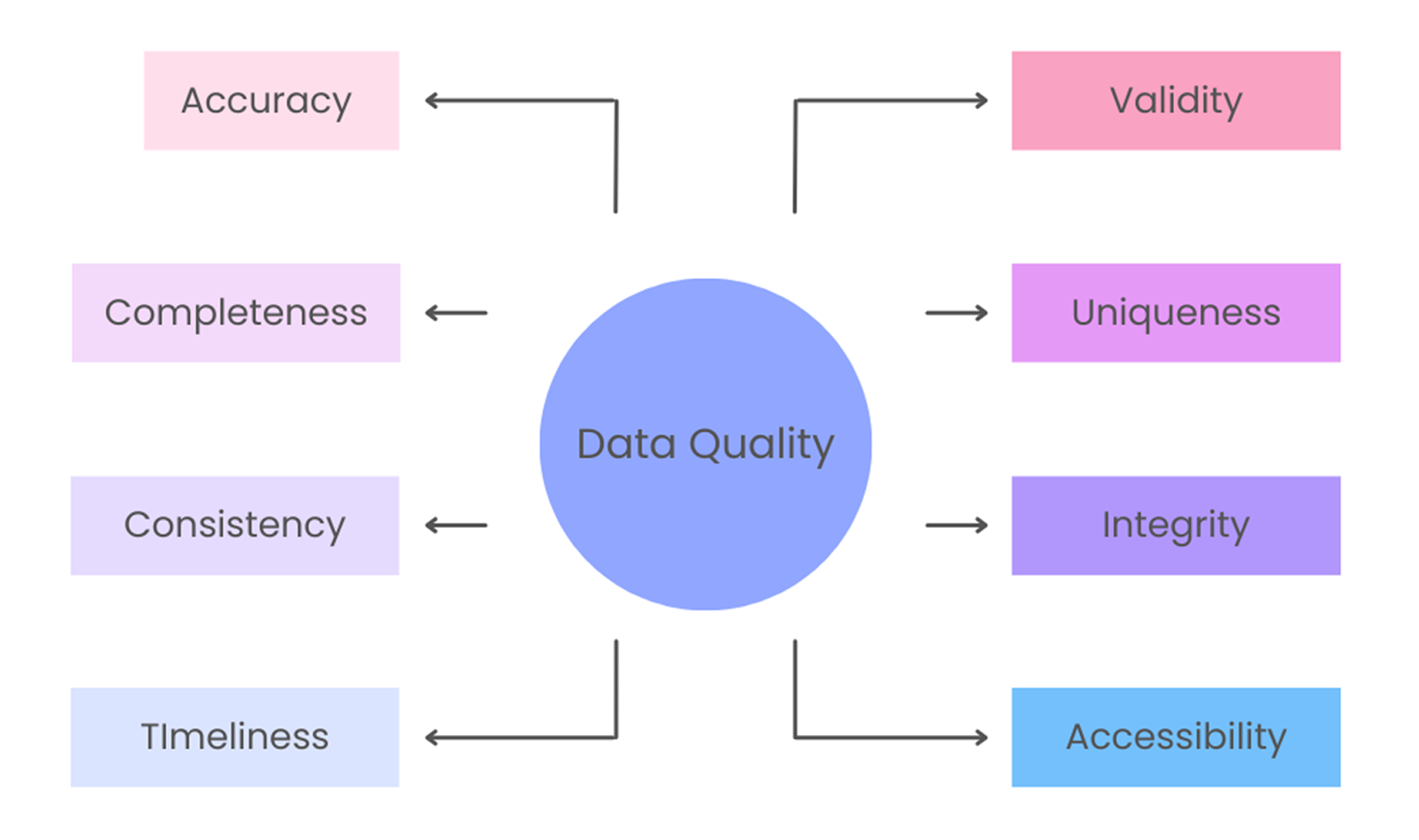

8 Dimensions of Data Quality

Data quality is measured along specific dimensions that indicate whether the data is accurate, reliable, and useful. By focusing on these dimensions, businesses can maintain high-quality data that supports accurate decisions, smooth operations, and long-term success.

Here are the eight key dimensions explained in simple terms:

- Accuracy: Accuracy means the data matches real-world information and is free of mistakes. For example, a customer’s phone number or email address should be correct. Accurate data helps businesses make better decisions and avoid errors.

- Completeness: Completeness ensures all necessary information is present. For instance, a sales record should include details like the product, customer, and transaction amount. Missing information can make the data less useful and harder to analyze.

- Consistency: Consistency means the same data is uniform across different systems. For example, a customer’s name should appear the same in both the billing and CRM systems. Inconsistent data can create confusion and reduce trust.

- Timeliness: Timeliness ensures that data is up-to-date and available when needed. For example, stock levels in an inventory system should reflect the current quantities. Outdated data can lead to poor decisions and missed opportunities.

- Validity: Validity checks whether the data follows the required rules and formats. For example, dates should be in “YYYY-MM-DD” format, and phone numbers should have the correct number of digits. Invalid data can cause errors and slow down processes.

- Uniqueness: Uniqueness ensures there are no duplicates in the data. For example, a customer should only have one profile in the system. Duplicate records waste resources and can lead to incorrect analysis.

- Integrity: Integrity ensures that data relationships are accurate and maintained. For example, every order should have a valid customer ID that matches an entry in the customer database. Broken links between data can lead to incomplete or incorrect insights.

- Accessibility: Accessibility means data is easy to find and use for its purpose. For example, employees should be able to access customer records in a secure and user-friendly system. If data is hard to access, it slows down work and reduces its effectiveness.
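Several of these dimensions can be expressed as simple measurements. The following is a minimal sketch, not a definitive implementation, showing completeness, uniqueness, and integrity on a few in-memory records. The sample data, field names (`customer_id`, `email`, `order_id`), and helper functions are hypothetical and chosen only for illustration.

```python
# Hypothetical sample data; all field names are illustrative assumptions.
customers = [
    {"customer_id": "C1", "name": "Ana Ruiz", "email": "ana@example.com"},
    {"customer_id": "C2", "name": "Bo Chen", "email": None},
    {"customer_id": "C2", "name": "Bo Chen", "email": None},  # duplicate profile
]
orders = [
    {"order_id": "O1", "customer_id": "C1", "amount": 120.0},
    {"order_id": "O2", "customer_id": "C9", "amount": 75.5},  # no such customer
]


def completeness(records, field):
    """Share of records where `field` is present and non-empty."""
    if not records:
        return 1.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def duplicate_keys(records, key):
    """Key values that appear more than once (uniqueness violations)."""
    seen, dupes = set(), set()
    for r in records:
        value = r[key]
        if value in seen:
            dupes.add(value)
        seen.add(value)
    return sorted(dupes)


def orphaned(children, parents, key):
    """Child records whose key has no match among parents (integrity violations)."""
    known = {p[key] for p in parents}
    return [c for c in children if c[key] not in known]


email_completeness = completeness(customers, "email")
duplicate_ids = duplicate_keys(customers, "customer_id")
orphan_orders = orphaned(orders, customers, "customer_id")

print(f"email completeness: {email_completeness:.2f}")
print("duplicate customer IDs:", duplicate_ids)
print("orders without a customer:", [o["order_id"] for o in orphan_orders])
```

Dimensions such as accuracy and accessibility are harder to measure this way, because they depend on comparing the data with the real world or on how the surrounding systems are set up.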

| Dimension | Technique | Explanation |
|---|---|---|
| Accuracy | Regex Checks | Validate that values match the expected pattern. |
| Accuracy | Range Checks | Ensure numeric values fall within predefined minimum and maximum limits. |
| Completeness | Null Checks | Identify missing values in critical fields. |
| Completeness | Row Validation | Ensure that all required columns in a record are populated. |
| Completeness | Lookup Tables | Fill gaps for missing values, such as coordinates for branches. |
| Consistency | Data Transformation Rules | Standardize formats like YYYY-MM-DD for dates. |
| Consistency | Rounding Rules | Ensure numeric fields maintain uniform precision (e.g., two decimal places). |
| Timeliness | Recency Checks | Compare date fields with the current date to ensure they reflect recent updates. |
| Timeliness | Timestamps | Track when data was last updated and prioritize the latest records. |
| Validity | Field Validations | Enforce correct lengths and formats for fields. |
| Validity | Domain Validation Rules | Confirm that values, such as names or IDs, belong to the allowed set. |
| Uniqueness | Duplicate Detection | Use algorithms to identify and remove duplicate entries. |
| Uniqueness | Distinct Row Checks | Ensure that all records are unique based on key identifiers. |
| Integrity | Foreign Key Validations | Confirm relationships between related fields. |
| Integrity | Relationship Verification | Ensure each record references valid entries in linked tables. |
| Accessibility | Availability Testing | Confirm that datasets (e.g., FTP files, MySQL database) are reachable. |
| Accessibility | Validate User Permissions | Ensure authorized users can access the data in secure and user-friendly ways. |
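A few of the techniques in the table can be sketched as small, reusable functions. The example below assumes a date pattern of YYYY-MM-DD and a hypothetical inventory record; the function names, field names, and thresholds are illustrative choices, not part of any specific tool.

```python
import re
from datetime import date, timedelta

# Assumed pattern: four digits, two digits, two digits, separated by hyphens.
DATE_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2}")


def matches_format(value, pattern=DATE_PATTERN):
    """Regex check: the whole value must match the expected pattern."""
    return isinstance(value, str) and pattern.fullmatch(value) is not None


def in_range(value, minimum, maximum):
    """Range check: value must lie within [minimum, maximum]."""
    return minimum <= value <= maximum


def missing_fields(record, required):
    """Null check: required fields that are absent, None, or empty."""
    return [f for f in required if record.get(f) in (None, "")]


def is_recent(updated_on, today, max_age_days):
    """Recency check: updated within the allowed window and not in the future."""
    return timedelta(0) <= today - updated_on <= timedelta(days=max_age_days)


# Hypothetical inventory record; `today` is fixed so the example is reproducible.
today = date(2024, 1, 31)
record = {"sku": "A-100", "quantity": 40, "price": None,
          "updated_on": date(2024, 1, 29)}

print(matches_format("2023-12-01"))                          # format check
print(in_range(record["quantity"], 0, 10_000))               # range check
print(missing_fields(record, ["sku", "quantity", "price"]))  # null check
print(is_recent(record["updated_on"], today, max_age_days=7))
```

Note that a regex check only confirms the shape of a value. A string such as "2023-13-45" would pass the pattern above even though it is not a real date, so format checks are usually paired with range or domain checks.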

Methods to Improve Data Quality

Improving data quality requires a combination of proactive monitoring, clear rules, and the right tools. A structured approach that starts with profiling, then validates the data and cleans errors, delivers high-quality data that supports better decisions and smooth operations.

The table below lists common data quality problems that data profiling can identify in a loan dataset, with an example of each.

| Data Quality Issue | Example |
|---|---|
| Invalid Value | Loan Status should be “Approved” or “Rejected,” but the current value is “Unknown.” |
| Cultural Rule Conformity | Loan Date is given as “2023/12/01” or “01-12-2023,” but the required format is “YYYY-MM-DD.” |
| Value Out of Required Range | Loan Amount is recorded as -500 or 1,000,000 when the allowed range is $1,000 to $500,000. |
| Verification | Customer ID “CUST00123” does not exist in the Customer Master Table. |
| Format Inconsistency | Loan ID is written as “LOAN00123” in one system and “123LOAN” in another. |
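The rules in this table translate fairly directly into a profiling routine. The sketch below applies them to two hypothetical loan records. The field names, the sample customer IDs, and the Loan ID pattern (`LOAN` followed by five digits, inferred from “LOAN00123”) are assumptions made for illustration.

```python
import re
from datetime import datetime

ALLOWED_STATUSES = {"Approved", "Rejected"}
MIN_AMOUNT, MAX_AMOUNT = 1_000, 500_000
LOAN_ID_PATTERN = re.compile(r"LOAN\d{5}")        # assumed from "LOAN00123"
ISO_DATE_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2}")


def is_iso_date(text):
    """True if text is shaped like YYYY-MM-DD and is a real calendar date."""
    if not isinstance(text, str) or ISO_DATE_PATTERN.fullmatch(text) is None:
        return False
    try:
        datetime.strptime(text, "%Y-%m-%d")
        return True
    except ValueError:
        return False


def profile_loan(loan, known_customer_ids):
    """Return the list of issue labels found in one loan record."""
    issues = []
    if LOAN_ID_PATTERN.fullmatch(loan["loan_id"]) is None:
        issues.append("format inconsistency")
    if loan["status"] not in ALLOWED_STATUSES:
        issues.append("invalid value")
    if not is_iso_date(loan["loan_date"]):
        issues.append("date format")
    if not MIN_AMOUNT <= loan["amount"] <= MAX_AMOUNT:
        issues.append("value out of range")
    if loan["customer_id"] not in known_customer_ids:
        issues.append("verification")
    return issues


# Hypothetical stand-in for the Customer Master Table.
known_customer_ids = {"CUST00001", "CUST00002"}
loans = [
    {"loan_id": "LOAN00001", "status": "Approved", "loan_date": "2023-12-01",
     "amount": 25_000, "customer_id": "CUST00001"},
    {"loan_id": "123LOAN", "status": "Unknown", "loan_date": "01-12-2023",
     "amount": -500, "customer_id": "CUST00123"},
]

report = {loan["loan_id"]: profile_loan(loan, known_customer_ids) for loan in loans}
for loan_id, issues in report.items():
    print(loan_id, issues or "no issues found")
```

Returning labels instead of raising an error on the first failure lets a profiling run summarize every problem in a record, which is usually what is needed before deciding how to clean the data.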

Here are some simple and effective methods to enhance data quality:

- Data Profiling: Data profiling helps you find missing values, duplicate entries, or inconsistent formats. For example, you can quickly spot customer records missing phone numbers or addresses. By regularly profiling your data, you can address issues at an early stage.

- Validate Data: Set up validation rules to ensure data is accurate as it’s entered or imported. For instance, ensure email addresses follow the correct format, dates match the required structure, and mandatory fields are filled.

- Clean Data: Cleansing is the process of fixing errors in your current data. This includes filling in missing information, correcting invalid formats, and removing duplicate records. For example, you might standardize addresses by correcting spelling mistakes or merging duplicate customer profiles.

- Standardize Formats: Use consistent formats and units across all systems to avoid confusion. For instance, ensure dates use a single format like YYYY-MM-DD and amounts are in the same currency. Standardized data makes integration and analysis smoother and more accurate.

- Data Audits: Schedule regular audits to check for issues like outdated information, missing fields, or inconsistencies. For example, you can review customer records to ensure their contact details are still current.

- Automation: Use automation tools to monitor and improve data quality in real time. Automated systems can validate new entries, flag inconsistencies, and correct common errors.

- Data Governance: Create clear rules for how data is collected, stored, and maintained. Assign specific roles to ensure accountability and provide guidelines to standardize practices across teams.

- Data Quality Tools: Specialized tools like Talend or Informatica make it easier to detect and fix errors. These tools can automate profiling, cleansing, and validation tasks, allowing teams to manage large datasets efficiently.
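As an illustration of the cleaning and standardizing methods above, the following sketch converts dates to YYYY-MM-DD and merges duplicate customer profiles. It assumes that incoming dates use one of three known formats, with the day first in the hyphenated day-month-year form, and that a normalized email address identifies a customer. Both assumptions, along with the field and function names, are hypothetical.

```python
from datetime import datetime

# Assumed input formats; in "%d-%m-%Y" the day comes before the month.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%Y/%m/%d", "%d-%m-%Y")


def standardize_date(text):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def merge_duplicates(records, key):
    """Keep one record per normalized key, filling gaps from later duplicates."""
    merged = {}
    for record in records:
        normalized = record[key].strip().lower()
        if normalized not in merged:
            merged[normalized] = dict(record, **{key: normalized})
        else:
            kept = merged[normalized]
            for field, value in record.items():
                if kept.get(field) in (None, ""):
                    kept[field] = value
    return list(merged.values())


# Hypothetical customer profiles with a duplicate and mixed date formats.
customers = [
    {"email": "Ana@Example.com ", "phone": None, "signup_date": "2023/12/01"},
    {"email": "ana@example.com", "phone": "555-0100", "signup_date": "01-12-2023"},
    {"email": "bo@example.com", "phone": "555-0101", "signup_date": "2024-01-15"},
]

cleaned = merge_duplicates(customers, "email")
for customer in cleaned:
    customer["signup_date"] = standardize_date(customer["signup_date"])

for customer in cleaned:
    print(customer)
```

Dates that match none of the known formats come back as `None` rather than being guessed, so they can be flagged for review during a data audit instead of being silently altered.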